Reasons for unrest: Using transformers to understand the reasons behind global protests

We have developed a technique to understand the dominant reasons for protests and demonstrations happening around the world. Our work builds on the ACLED dataset, a source that’s regularly quoted in the media and described by the Guardian as being “the most comprehensive database of conflict incidents around the world”.

ACLED records political violence and protest events, but the subject of each event is captured only in a free-text description. That makes it hard to cluster together events that share a common theme and to ask questions such as “how many BLM protests are happening per week?” or “what are the main issues that people are protesting about in Nigeria?”

In this article we’ll take the latter question, “what are the main issues that people are protesting about in Nigeria?”, and provide a worked example showing how we use state-of-the-art NLP techniques such as transformers and large language models to help us cluster similar events.

Methodology

Step 1: Explore and understand limitations of our source data

The first step of any data science project is always to consider the source of the data. It’s not just about how much trust we place in the authority that publishes the data. We should also have a view on all of the following dimensions, to help us interpret the data and understand the limitations of what it can tell us:

- Motivation for dataset creation

- Dataset composition

- Data collection process

- Data pre-processing

- Dataset distribution and licensing

- Dataset maintenance

- Legal and ethical considerations

We won’t cover all these aspects for ACLED here, but suffice to say that we believe that it is the best open dataset of protests that’s available to us. The most notable limitation is that ACLED researchers generally rely on media reports as their source so it’s quite likely that there will be omissions due to lack of coverage.

To get more insight into the composition of the dataset we’ll do some exploratory data analysis using Pandas.

ACLED helpfully splits events into different types. We’re only interested in protests, so we filter down to those, which leaves 223,747 protests globally since January 2016. Filtering geographically to Nigeria leaves 2,525 events. The sources are predominantly national newspapers in Nigeria.
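The filtering described above can be sketched in Pandas as follows. This is a minimal illustration, not our exact code: the column names (`event_date`, `event_type`, `country`, `source`, `notes`) are assumed to match the ACLED export, and the rows are invented placeholders rather than real ACLED records.

```python
import pandas as pd

# Tiny stand-in for an ACLED export. These rows are invented for illustration;
# the column names are assumed to match the real export.
events = pd.DataFrame(
    {
        "event_date": ["2016-03-01", "2019-07-15", "2020-10-10", "2015-12-31"],
        "event_type": ["Protests", "Battles", "Protests", "Protests"],
        "country": ["Nigeria", "Nigeria", "India", "Nigeria"],
        "source": ["Paper A", "Paper B", "Paper C", "Paper A"],
        "notes": ["placeholder 1", "placeholder 2", "placeholder 3", "placeholder 4"],
    }
)
events["event_date"] = pd.to_datetime(events["event_date"])

# Keep only protest events from January 2016 onwards.
is_protest = events["event_type"] == "Protests"
is_recent = events["event_date"] >= pd.Timestamp("2016-01-01")
protests = events[is_protest & is_recent]

# Narrow down to a single country.
nigeria = protests[protests["country"] == "Nigeria"]

print(len(protests), len(nigeria))
# Which outlets the remaining events were sourced from.
print(nigeria["source"].value_counts())
```

Counting values in the `source` column is how one might check which outlets dominate the coverage for a given country.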

Step 2: Extract the reasons for protests using question answering

The transformer architecture was first proposed in the 2017 paper Attention Is All You Need, and since then there has been a full-scale revolution in Natural Language Processing. The best-known transformer models include BERT and OpenAI’s GPT-3 (indeed, GPT stands for generative pre-trained transformer). GPT-3 received significant media attention upon release in June 2020 for its ability to [generate coherent human-like writing](https://gpt3examples.com/) and its potential to generate a deluge of fake news that is indistinguishable from human writing.

We are harnessing the power of these large language models by using a pre-trained transformer model from Hugging Face with a pipeline designed for question answering. This allows us to literally ask the question:

What is the reason for the protest?

The full description in the ACLED dataset may say:

On Jan 14, protests were held by residents of Arjun Nagar, Bathinda (Punjab), against overflowing sewage in the neighbourhood.

In this case our question answering task will return:

'overflowing sewage in the neighbourhood.'
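A minimal sketch of this step using the Hugging Face `transformers` question-answering pipeline. The article does not depend on a particular checkpoint, so relying on the pipeline's default model here is an assumption, and the helper names `build_qa_pipeline` and `extract_reason` are ours for illustration.

```python
QUESTION = "What is the reason for the protest?"


def build_qa_pipeline():
    """Load a pre-trained extractive QA pipeline (downloads a model on first use)."""
    # Imported lazily because transformers is a heavy dependency.
    from transformers import pipeline

    # Assumption: no checkpoint is named, so the library's default QA model is used.
    return pipeline("question-answering")


def extract_reason(qa, description):
    """Ask the fixed question against one event description.

    Returns the extracted answer span and the model's confidence score.
    """
    result = qa(question=QUESTION, context=description)
    return result["answer"], result["score"]
```

With a real pipeline, `extract_reason(build_qa_pipeline(), description)` returns the extracted span together with a confidence score, which could be used to flag low-confidence extractions for manual review.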

Step 3: Cluster together similar protests

In order to see patterns we will need to cluster together similar protest events based on the reasons that we have extracted.

We use two different techniques to do this: topic modelling and clustering. Short text topic modelling (STTM) is a very different problem from topic modelling for large documents. It’s necessary to create embeddings rather than use bag-of-words or TF-IDF based approaches in order to capture the semantic meaning of the text, not just the specific words used. For the topic modelling we use a process that is based on LDA but assumes that each document contains only one topic.
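To make the "one topic per document" idea concrete, here is a small sketch of a collapsed Gibbs sampler for a Dirichlet multinomial mixture, one well-known model that fits that description. This is an assumption on our part for illustration: it is not necessarily the exact implementation used here, the function name and hyperparameter defaults are hypothetical, and the example documents are invented.

```python
import math
import random
from collections import Counter


def one_topic_per_doc(docs, n_clusters=8, alpha=0.1, beta=0.1, n_iters=20, seed=0):
    """Assign each tokenised document to exactly one cluster.

    docs is a list of token lists; returns one cluster id per document.
    """
    rng = random.Random(seed)
    vocab_size = len({w for doc in docs for w in doc})
    doc_counts = [Counter(doc) for doc in docs]
    docs_in = [0] * n_clusters                          # documents per cluster
    tokens_in = [0] * n_clusters                        # tokens per cluster
    words_in = [Counter() for _ in range(n_clusters)]   # word counts per cluster

    def update(i, k, sign):
        """Add (sign=+1) or remove (sign=-1) document i from cluster k."""
        docs_in[k] += sign
        tokens_in[k] += sign * len(docs[i])
        for w, c in doc_counts[i].items():
            words_in[k][w] += sign * c

    # Start from a random assignment.
    labels = [rng.randrange(n_clusters) for _ in docs]
    for i, k in enumerate(labels):
        update(i, k, +1)

    for _ in range(n_iters):
        for i, doc in enumerate(docs):
            update(i, labels[i], -1)  # take the document out of its cluster
            log_p = []
            for k in range(n_clusters):
                # Prefer clusters that already hold many documents...
                lp = math.log(docs_in[k] + alpha)
                # ...and whose word counts match this document's words.
                for w, c in doc_counts[i].items():
                    for j in range(c):
                        lp += math.log(words_in[k][w] + beta + j)
                for j in range(len(doc)):
                    lp -= math.log(tokens_in[k] + vocab_size * beta + j)
                log_p.append(lp)
            top = max(log_p)
            weights = [math.exp(lp - top) for lp in log_p]
            labels[i] = rng.choices(range(n_clusters), weights=weights)[0]
            update(i, labels[i], +1)  # put it back in the sampled cluster
    return labels


# Invented, pre-tokenised reasons purely for illustration.
docs = [
    ["unpaid", "salaries"],
    ["unpaid", "pensions"],
    ["fuel", "price", "hike"],
    ["electricity", "price", "hike"],
]
print(one_topic_per_doc(docs, n_clusters=3))
```

Because every document gets a single label, clusters that end up empty simply drop out, so `n_clusters` acts as an upper bound rather than a fixed number of topics.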

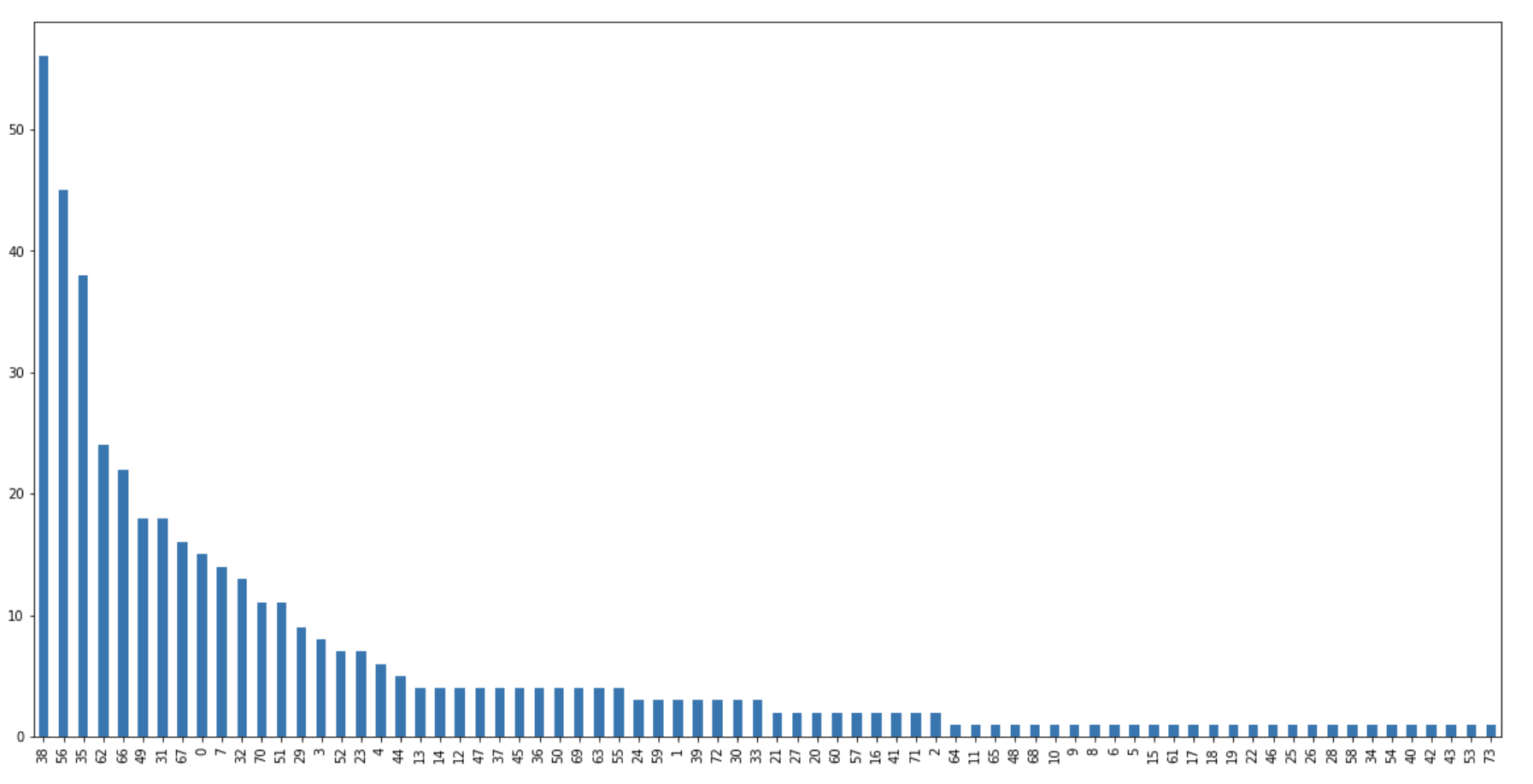

Bar chart showing number of protests per cluster

The chart above shows the distribution of protest events per topic cluster. There is a long tail of small clusters that we will ignore for now.
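Counting events per cluster and dropping the long tail could look like the sketch below. The cluster ids and the `min_size` threshold are hypothetical values chosen for illustration.

```python
from collections import Counter


def cluster_sizes(labels, min_size=1):
    """Count events per cluster, largest first, dropping clusters below min_size."""
    counts = Counter(labels)
    return [(cluster, n) for cluster, n in counts.most_common() if n >= min_size]


# Hypothetical cluster ids, one per protest event.
labels = [38, 38, 38, 62, 62, 7]
print(cluster_sizes(labels, min_size=2))  # [(38, 3), (62, 2)]
```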

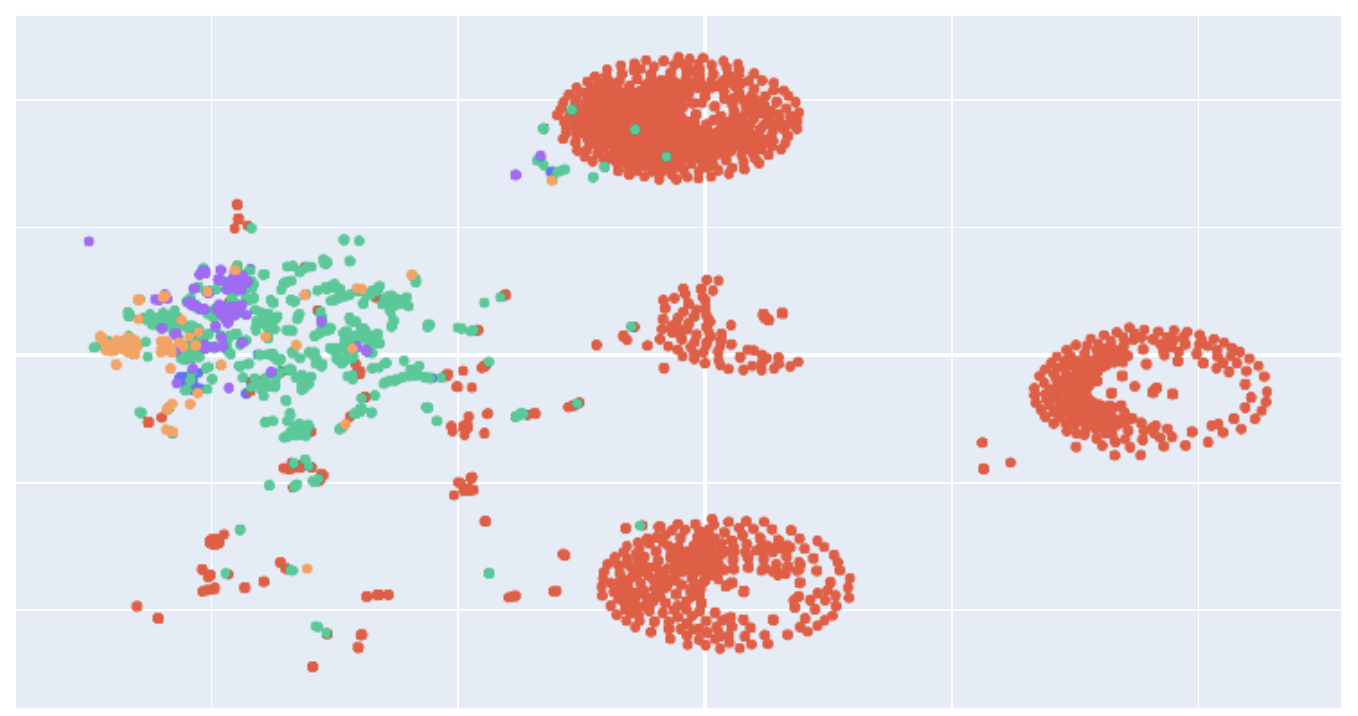

In addition to performing topic modelling, we also cluster the events by creating an embedding for each extracted reason using Google’s Universal Sentence Encoder, and then visualising the embeddings.
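A sketch of the embedding step, assuming the encoder is loaded from TensorFlow Hub. The model URL and version are assumptions (the article does not state which variant is used), and the helper names are ours. The rows are normalised so that dot products between them are cosine similarities.

```python
import numpy as np

# Assumed model handle; the exact encoder version is not stated in the article.
USE_URL = "https://tfhub.dev/google/universal-sentence-encoder/4"


def load_encoder(url=USE_URL):
    """Load the sentence encoder (downloads the model on first use)."""
    # Imported lazily because tensorflow_hub is a heavy dependency.
    import tensorflow_hub as hub

    return hub.load(url)


def embed_reasons(encoder, reasons):
    """Return an (n_reasons, dim) array of L2-normalised sentence embeddings."""
    vectors = np.asarray(encoder(reasons), dtype=float)
    norms = np.linalg.norm(vectors, axis=1, keepdims=True)
    # Guard against division by zero for an all-zero vector.
    return vectors / np.clip(norms, 1e-12, None)
```

Any callable that maps a list of strings to a matrix of vectors can be passed as `encoder`, which makes it easy to swap in a different sentence embedding model.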

Results

In order to visualise the clusters, we project these high-dimensional vectors onto a 2D plane. The colours represent the clusters identified by topic modelling.
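As a rough sketch of the projection step, the snippet below uses PCA via NumPy's SVD. PCA is a stand-in chosen for brevity; nonlinear methods such as t-SNE or UMAP are common alternatives for this kind of plot. The random vectors are placeholders for real sentence embeddings.

```python
import numpy as np


def project_to_2d(vectors):
    """Project row vectors onto their first two principal components."""
    x = np.asarray(vectors, dtype=float)
    centred = x - x.mean(axis=0)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    return centred @ vt[:2].T


# Placeholder vectors standing in for real sentence embeddings.
rng = np.random.default_rng(0)
fake_embeddings = rng.normal(size=(6, 512))
points = project_to_2d(fake_embeddings)
print(points.shape)  # (6, 2)
```

Each row of `points` can then be drawn as a dot in a scatter plot and coloured by its topic cluster id.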

We can see clusters of protests emerge with the following themes:

38: Nonpayment of salaries, pensions and benefits

56: Gender-based violence and a small sub-cluster of violence against Christians

35: Violence and killings by state and criminal gangs

62: Corruption in the country

66: Insecurity, governance, elections

31: Illegal land acquisition and forceful eviction

67: Price hikes (fuel and electricity)

Bonus: USA

Here’s a quick look at the clusters for the USA in 2020. The red dots all relate to the Black Lives Matter movement, protests against the killing of George Floyd, or support for police reform.

Conclusion

The techniques we have demonstrated here show promising results. They can help us to understand and quantify the dominant topics and themes of protests, provided we assume that the underlying dataset is reasonably complete.

There is a lot of further work that could be done to improve the performance of the question answering, topic modelling and clustering tasks, such as fine-tuning the language models on domain-specific text. However, a major bottleneck (as with most NLP systems) is evaluation: while many automated metrics are available, judging the semantic coherence and separation of clusters still takes a significant amount of human effort.