AI Strategy Playbook: Finding High-Impact Use Cases

Every organisation knows AI could transform its operations. Few know exactly where to start.

The challenge isn't a lack of ideas - it's too many. Every department has suggestions. Every vendor has a pitch. And somewhere in the noise are the three or four opportunities that would actually move the needle.

This article outlines the structured discovery methodology we've refined over 10 years of AI delivery for organisations including the Cabinet Office, Unilever, and the Bank of England. It's designed to cut through the hype and surface the use cases that matter.

Why Discovery Matters More Than You Think

The temptation is to skip straight to building. You've seen the demos. The technology clearly works. Why not just pick something and start?

Because the cost of solving the wrong problem is enormous - not just in wasted development, but in lost credibility. Failed pilots breed scepticism. And scepticism kills the momentum you need for genuine transformation.

When we worked with the Cabinet Office on AI for document management, we spent the first five weeks purely on discovery - engaging with over 40 stakeholders across 13 government departments. That investment paid off. The resulting solution achieved an 80% reduction in incorrectly identified documents and was described by Luke Sands, Head of Digital at Cabinet Office, as "the best use of AI I've seen within government."

Discovery isn't delay. It's de-risking.

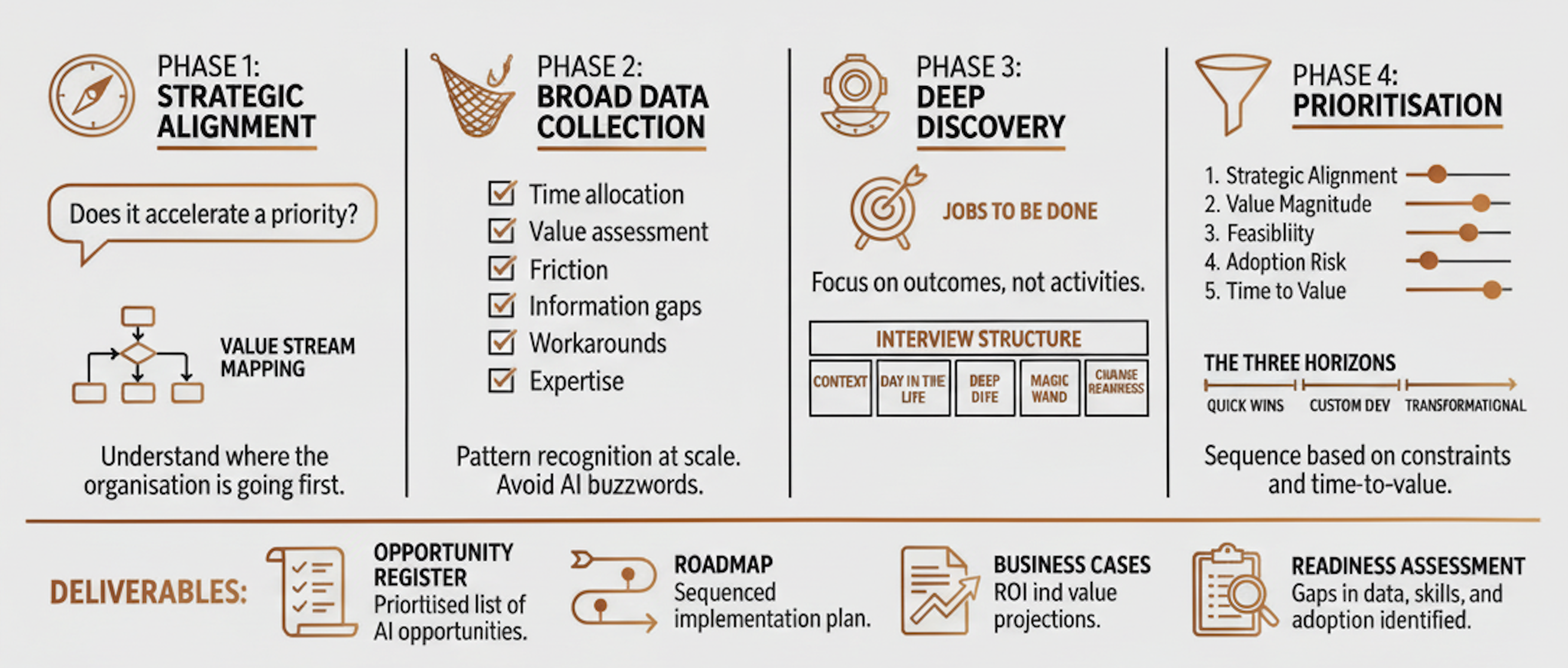

The Four Phases of Structured Discovery

Phase 1: Strategic Alignment

Before asking anyone about their pain points, understand where the organisation is trying to go.

This sounds obvious, but it's routinely skipped. Teams dive into process mapping without first establishing which processes actually matter to the strategy.

Key questions for leadership:

- What are the strategic priorities for the next 2-3 years?

- Where is margin pressure coming from?

- What's constraining growth?

- If a well-funded competitor entered your market tomorrow, what would they do differently?

The answers create a filter. When you later surface dozens of potential AI applications, you can immediately ask: does this accelerate a stated priority, or is it a distraction?

Framework: Value Stream Mapping

Before asking "what's painful?", map how value actually flows through the organisation. Where are the handoffs? Where does work queue? Where is information re-keyed between systems?

This structural view reveals inefficiencies that individuals may not even recognise - they've simply accepted them as "how things work."

Phase 2: Broad Data Collection

Now cast the net wide. The goal is pattern recognition at scale.

The Employee Survey

A well-designed survey can surface insights from hundreds of employees in days. The key is asking the right questions and, critically, not mentioning AI.

Why? Because "where could we use AI?" invites speculation. "What takes too long?" surfaces real problems.

Questions that work:

- Time allocation: List your five most time-consuming weekly activities - reveals where human effort is concentrated

- Value assessment: For each activity, does this directly create value, or is it necessary overhead? - identifies automation candidates

- Friction: What task feels like it should be easier than it is? - surfaces unmet tooling needs

- Information gaps: What decisions do you make where you wish you had better data? - reveals augmentation opportunities

- Workarounds: What unofficial tools or spreadsheets have you created? - exposes shadow IT and latent demand

- Expertise: What part of your job requires judgment that would be hard to teach? - identifies what should stay human

The last question is as important as the others. Good discovery identifies what AI shouldn't touch as clearly as what it should.
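To make the "pattern recognition at scale" step concrete, here is a minimal sketch of tallying answers to the time-allocation question. The response data and the `top_activities` helper are hypothetical, and real free-text answers would likely need more normalisation than lower-casing and trimming.

```python
from collections import Counter

def top_activities(responses, n=3):
    """Return the n activities named by the most respondents."""
    counts = Counter()
    for activities in responses:
        # Count each activity once per respondent, ignoring case and stray spaces.
        counts.update({a.strip().lower() for a in activities})
    return counts.most_common(n)

# Hypothetical survey answers: one list of activities per respondent.
responses = [
    ["Weekly reporting", "Email triage", "Data entry"],
    ["weekly reporting", "Data entry"],
    ["Weekly reporting ", "Meeting notes"],
]

for activity, respondents in top_activities(responses, n=2):
    print(f"{activity}: named by {respondents} respondents")
```

Activities that many people name independently are the ones worth exploring in the interviews that follow.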

Phase 3: Deep Discovery

Surveys give you breadth. Interviews give you depth.

Jobs to Be Done

Don't just ask people what they do. Ask what they're trying to accomplish.

"When you produce that weekly report, what does success look like? Who uses it? What decisions does it inform?"

This reframes the conversation from activities to outcomes - which is where AI value actually lives. You might discover that a painful manual process exists only to produce a report that nobody reads. The solution isn't to automate the process; it's to eliminate it.

Interview Structure

For a 45-minute interview:

- 5 mins - Context: Role, tenure, team structure

- 15 mins - Day-in-the-life: Walk through a typical week using their calendar as a prompt

- 15 mins - Deep dive: Pick 2-3 activities and explore triggers, inputs, decisions, outputs

- 5 mins - Magic wand: "If you could change one thing..."

- 5 mins - Change readiness: "If we changed this process, what would make adoption easy or hard?"

That final question matters. Technical feasibility is only half the equation. A solution that works but that people won't use is still a failure.

Who to interview:

Don't just talk to executives. Include front-line high performers (who know the real shortcuts), front-line strugglers (who surface training and tooling gaps), and if possible, customers (who provide the external view of what actually matters).

When working with Danone on reducing allergy diagnosis time, understanding the parent's journey - not just the clinical process - was essential. The resulting conversational AI achieved a user recommendation rate of over 90% because it solved a problem from the user's perspective, not just the organisation's.

Phase 4: Prioritisation That Actually Works

You now have survey data, interview transcripts, process maps, and a long list of potential opportunities. The hard part is deciding what to do first.

Beyond the 2x2

The classic impact/effort matrix is a starting point, but it's too blunt for real decisions. We score opportunities across five dimensions:

- Strategic alignment: Does this directly support a stated business priority?

- Value magnitude: What's the annual impact if solved? (time, cost, revenue)

- Feasibility: Do we have the data, systems, and skills?

- Adoption risk: Will people actually use it? What's the change management lift?

- Time to value: How quickly can we show results?

No single dimension should dominate. A high-value opportunity with severe adoption risk may be a worse bet than a moderate-value opportunity that's easy to implement and builds momentum for larger changes.
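As an illustration of the five-dimension scoring, the sketch below ranks two hypothetical opportunities. The 1-5 scale, the equal weights, and every name in the code are assumptions made for the example; the method described above does not prescribe them. Adoption risk and time to value are inverted so that a higher score is always better.

```python
from dataclasses import dataclass

# Hypothetical equal weights: an assumption, not a prescribed weighting.
WEIGHTS = {
    "strategic_alignment": 0.2,
    "value_magnitude": 0.2,
    "feasibility": 0.2,
    "adoption": 0.2,       # higher score = lower adoption risk
    "time_to_value": 0.2,  # higher score = faster results
}

@dataclass
class Opportunity:
    name: str
    scores: dict  # dimension name -> score from 1 (weak) to 5 (strong)

def weighted_score(opp, weights=WEIGHTS):
    """Combine the five dimension scores into a single weighted number."""
    return sum(weight * opp.scores[dim] for dim, weight in weights.items())

def rank(opportunities):
    """Sort opportunities from highest to lowest weighted score."""
    return sorted(opportunities, key=weighted_score, reverse=True)

# Hypothetical opportunities for illustration only.
opportunities = [
    Opportunity("High value, hard to adopt", {
        "strategic_alignment": 5, "value_magnitude": 5, "feasibility": 3,
        "adoption": 1, "time_to_value": 2,
    }),
    Opportunity("Moderate value, easy to adopt", {
        "strategic_alignment": 4, "value_magnitude": 3, "feasibility": 4,
        "adoption": 5, "time_to_value": 5,
    }),
]

for opp in rank(opportunities):
    print(f"{weighted_score(opp):.1f}  {opp.name}")
```

With these made-up scores the moderate-value opportunity ranks first, which is the trade-off described above. Treat the total as a conversation starter and not a verdict: a very low score on any one dimension still deserves a closer look.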

Sequencing: Theory of Constraints

Don't just list opportunities - sequence them.

Ask: what's the current bottleneck? If we solve this, what becomes the next bottleneck? Which opportunities are dependencies for others?

This prevents the common failure of optimising something that wasn't actually the constraint. Making one step faster doesn't help if work just queues up at the next step.
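The two sequencing questions can be sketched in a few lines. The step capacities and the dependency map below are hypothetical, and the sketch assumes each step's weekly capacity can be estimated. It uses `graphlib.TopologicalSorter` from the Python standard library (3.9+) to order opportunities so that dependencies come first.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical weekly capacity of each step in one value stream.
capacity_per_week = {"intake": 120, "review": 40, "approval": 90}

def bottleneck(capacity):
    """Return the step with the lowest capacity: the current constraint."""
    return min(capacity, key=capacity.get)

def throughput(capacity):
    """End-to-end throughput is capped by the slowest step."""
    return min(capacity.values())

# Doubling intake speed leaves end-to-end throughput unchanged.
faster_intake = {**capacity_per_week, "intake": 240}

# Hypothetical opportunities mapped to the opportunities they depend on.
depends_on = {
    "document_search": set(),
    "automated_document_processing": {"document_search"},
    "decision_support": {"automated_document_processing"},
    "meeting_summarisation": set(),
}

def sequence(dependencies):
    """Order opportunities so that every dependency comes before its dependants."""
    return list(TopologicalSorter(dependencies).static_order())

print("Current bottleneck:", bottleneck(capacity_per_week))
print("Throughput before and after faster intake:",
      throughput(capacity_per_week), throughput(faster_intake))
print("Build order:", sequence(depends_on))
```

The order of independent items in the build sequence is not significant; only the dependency ordering is.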

The Three Horizons

Structure your roadmap around time-to-value:

- Horizon 1 (0-3 months): Quick wins using existing tools - meeting summarisation, writing assistance, document search. Low risk, visible progress, builds credibility.

- Horizon 2 (3-9 months): Custom development with workflow integration - automated document processing, internal knowledge bases, decision support tools. Requires investment but delivers significant value.

- Horizon 3 (9-18 months): Transformational initiatives - AI-native products, predictive operations, new business models. High investment, high reward, requires organisational readiness built in earlier horizons.

The key insight: plan all three horizons now, even though you'll execute sequentially. Quick wins should compound toward transformation, not become isolated experiments.
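A roadmap draft can be grouped by horizon with a small helper like the one below. This is a sketch under assumptions: the time-to-value estimates are invented, and because the horizon ranges share their boundary months, the code arbitrarily assigns 3 months to Horizon 1 and 9 months to Horizon 2.

```python
def horizon(months_to_value):
    """Map an estimated time-to-value in months to horizon 1, 2 or 3.

    Returns None when the estimate falls beyond the 18-month window.
    """
    if months_to_value < 0:
        raise ValueError("months_to_value must be non-negative")
    if months_to_value <= 3:
        return 1
    if months_to_value <= 9:
        return 2
    if months_to_value <= 18:
        return 3
    return None

def build_roadmap(estimates):
    """Group initiative names by horizon, skipping anything beyond 18 months."""
    roadmap = {1: [], 2: [], 3: []}
    for name, months in estimates.items():
        h = horizon(months)
        if h is not None:
            roadmap[h].append(name)
    return roadmap

# Hypothetical estimates in months, for illustration only.
estimates = {
    "meeting summarisation": 1,
    "internal knowledge base": 6,
    "predictive operations": 15,
}

roadmap = build_roadmap(estimates)
for h, names in roadmap.items():
    print(f"Horizon {h}: {', '.join(names)}")
```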

For bit.bio, a biotech company that has raised over $100M, we're helping build a data-driven ecosystem with this exact approach - an organisation-wide strategy that connects immediate operational improvements to long-term competitive advantage.

The Step Most Organisations Skip: Change Readiness

Technical success without organisational readiness equals shelfware.

For each priority initiative, assess:

- Awareness: Do stakeholders understand why this matters?

- Desire: Do they want to participate?

- Knowledge: Do they know how to work with AI?

- Ability: Do they have time and resources?

- Reinforcement: How will adoption be sustained?

If any of these are weak, address them before or alongside the technical build - not after.
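One simple way to record this assessment is to rate each dimension per initiative and flag the weak ones. The 1-5 scale, the threshold of 3, and the example ratings below are all assumptions chosen for illustration.

```python
DIMENSIONS = ("awareness", "desire", "knowledge", "ability", "reinforcement")
THRESHOLD = 3  # hypothetical cut-off: ratings below this count as weak

def weak_dimensions(ratings, threshold=THRESHOLD):
    """Return the readiness dimensions rated below the threshold."""
    missing = [d for d in DIMENSIONS if d not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {', '.join(missing)}")
    return [d for d in DIMENSIONS if ratings[d] < threshold]

# Hypothetical ratings (1 = weak, 5 = strong) for one initiative.
ratings = {
    "awareness": 4,
    "desire": 4,
    "knowledge": 2,
    "ability": 2,
    "reinforcement": 3,
}

gaps = weak_dimensions(ratings)
if gaps:
    print("Address before or alongside the build:", ", ".join(gaps))
else:
    print("No readiness gaps flagged")
```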

What Good Discovery Delivers

At the end of this process, you should have:

- AI Opportunity Register - 15-30 opportunities, scored and prioritised

- Value Stream Maps - Visual representation of where AI fits in key processes

- Roadmap - Sequenced initiatives across all three horizons (0-18 months)

- Business Cases - For top 3-5 opportunities with ROI projections

- Readiness Assessment - Data, skills, and change management gaps identified

- Governance Recommendations - How to resource, fund, and track AI initiatives

This isn't a report that sits on a shelf. It's an actionable plan with clear ownership and measurable outcomes.

The Pattern

AI that works isn't magic. It's the result of understanding the problem deeply before reaching for solutions, aligning initiatives to strategy rather than chasing shiny objects, building organisational readiness alongside technical capability, and sequencing work so that early wins create momentum for larger transformation.

The organisations getting real value from AI aren't the ones with the biggest budgets or the most advanced technology. They're the ones who did the discovery work to ensure they're solving the right problems.

Atchai helps organisations move from AI ambition to operational reality. If you're ready to identify where AI will deliver the most value - and build a roadmap to get there - get in touch.